Debug CrashLoopBackOff From K8s Pods

.avif)

Table of Contents

Have you ever had a Kubernetes pod crash and then repeatedly fail to start again? If so, you're not alone. This common error is known as CrashLoopBackOff. The Botkube team put together this article to help troubleshoot this common Kubernetes error and also offer a possible easier solution to solving the error!

What is the CrashLoopBackOff Error?

CrashLoopBackOff occurs when a pod fails to start for some reason. Kubernetes will try to restart the pod, but if the pod continues to fail, Kubernetes will eventually give up and mark the pod as CrashLoopBackOff. It is not as much of a pod erroring out as a pod delaying its start to give time for a fix to be implemented.

If you're seeing the CrashLoopBackOff error, there are a few things you can do to troubleshoot the problem. First, check the pod's logs to see if there are any errors that might be causing the pod to fail. You can also use the command kubectl get pods to get more information about the pod, including its status and resources.

Image mentioned in a help forum on Microsoft's site about users switching to Kubernetes.

Common Causes of Pod CrashLoopBackOff

CrashLoopBackOff occurs commonly in Kubernetes pods. It indicates that a pod has repeatedly failed to start and is now being restarted automatically. There are a number of reasons why this might happen, including:

Docker Versioning Issue (Common on First K8s Deployment)

One common reason for the CrashLoopBackOff error is using an outdated version of Docker. This will happen often when first switching to a Kubernetes deployment. The app that is being switched over may be on an older version of Docker. The Docker version for the app and K8s pod must match in order to run the services correctly.

To check your Docker version, run the following command:

<code>docker -v</code>

If your Docker version is outdated, you can update it by running the following command:

<code>sudo apt update</code>

<code>sudo apt install docker-ce</code>

*It is a best practice to use the latest version of Docker to prevent deprecated commands and inconsistencies that can cause containers to start and fail repeatedly.

Memory Limits (OOMKilled Pod Error)

Another issue that could be causing the repeatedly crashing of your Kubernetes Pod could be memory. Each pod will have a limit to the amount of memory they are allowed to use. If actions within the pod cause memory usage to go over this limit, the pod will crash. Find out more on the OOMKilled Error for Kubernetes on our other learn article.

K8s repeatedly starting and running out of memory would trigger the CrashLoopBackOff eventually.

Issue Caused by Third-Party Services

If you go to start a pod and receive the caused by post error, then it is most likely a third-party issue. Here is what the error what that would look like:

<code>send request failure caused by: Post</code>

This mostly has to do with how users set up their DNS for any third party services. This will also effect other environment variables as well. It is recommended you troubleshoot this issue with a shell, and check kube-dns config for issues.

Pods Using the Same Port

Another possible cause of the CrashLoopBackOff error is a port conflict. This can happen when two containers are trying to use the same port. To check for a port conflict, you can run the following command:

<code>netstat -an | grep LISTEN</code>

This will show you a list of all ports that are currently in use. If you see a port that is in use by two different containers, this could be causing the CrashLoopBackOff error.

How to fix CrashLoopBackOff Kubernetes Pods

The Easy Way - with Botkube's K8s Tool

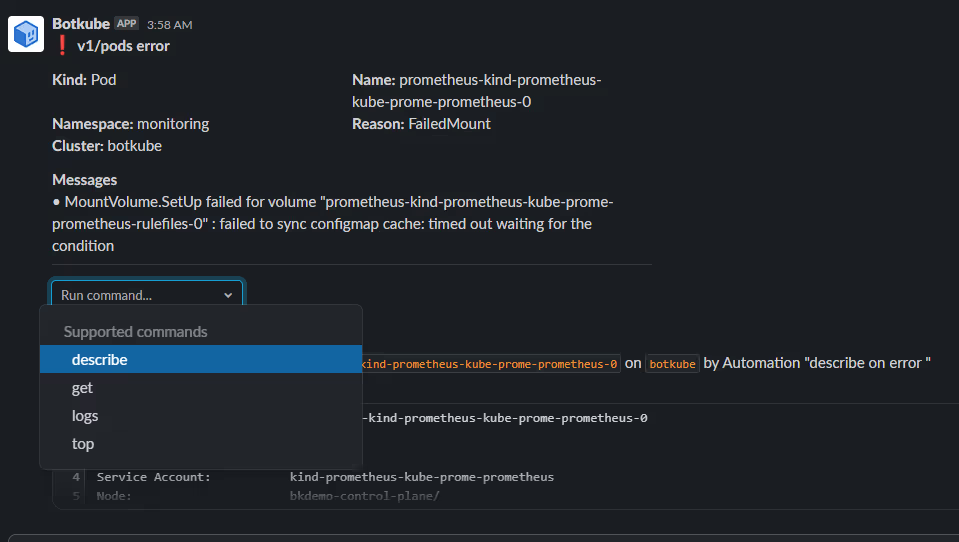

So if you truly intend on troubleshooting these pods instead of having them stuck in a restart loop, Botkube would speed the process up. Installing Botkube Cloud into your cluster allows for 3 timesaving K8s troubleshooting benifits:

1. The Kubernetes alert of Crashloopbackoff error happening would come directly into Slack or your preferred chat platform.

2. Between Botkube's own troubleshooting suggestions and prompting our new ChatGPT plugin, you receive suggestions of the best solution based on the error messages received.

3. Install executors, like our Kubectl commands, to one click run troubleshooting commands solving CrashLoopBackOff error without leaving Slack or Teams.

The Manual Way - Get Your Terminal Ready

Without our K8s AI assistant to help with Kubernetes audits, everything gets a little more manual. We will provide some troubleshooting tips to help here, but our team highly recommends using our troubleshooting tool! It just speeds up the troubleshooting process and provides more capabilities for feature K8s use cases.

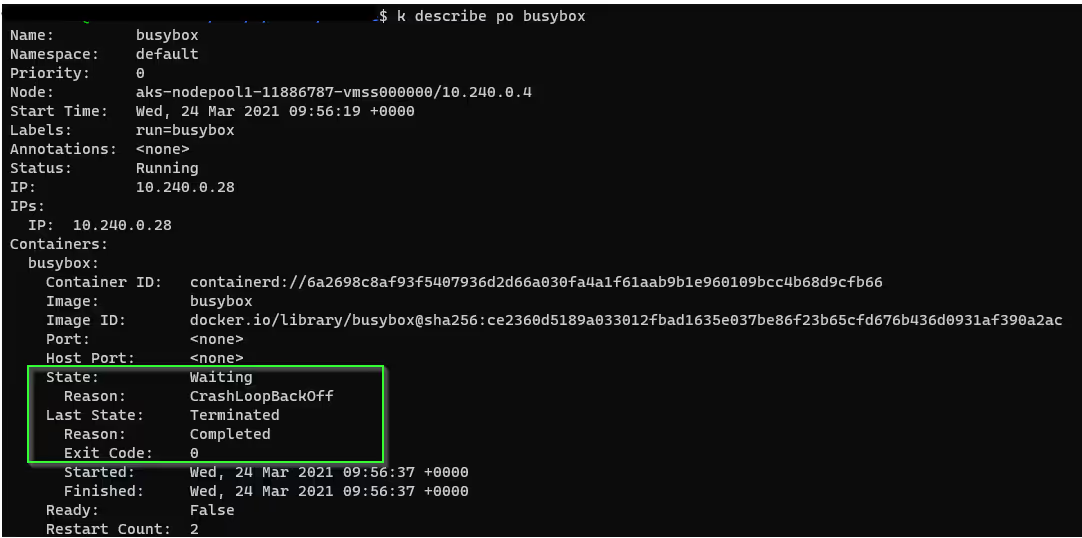

See if running <code>kubectl describe pod [name]</code> displays one of the following error messages:

- Liveness probe failed

- Back-off restarting failed container

These messages indicate that you are experiencing a temporary resource overload. This is often due to an activity spike. One solution is to adjust periodSeconds or timeoutSeconds to allow the application more time to process. This may resolve the issue or allow your application to work itself to another potential error.

If the error messages do not or no longer appear, you will need to check the pod logs.

To check a pods logs simply run the <code>kubectl logs --previous --tail 20</code>command and download the output. Now is where the true investigating work comes into Kubernetes. If it is not immediately obvious with the first 20 lines of logs, the command will have to be adjusted until an error log of value is found.

You are looking for OOMKilled logs or disconnect logs, something that would have caused the pod to fail/require restart. Our team would like to mention our cloud product here again as it takes the manual searching out of Kubernetes error logging.

One last log check, this time from the pod deployment.

At this point you have checked the last 20 lines, and tried to find a back-off restarting failed error. If neither of those show a solution, you will have to pull deployment logs. These can be easily pulled into a file with the <code>kubectl logs -f deploy/ -n </code>command.

These deployment logs may show other issues from within the cluster as well. Log for the file paths of your pod and everything that is being deployed there. Look for any error with deploying messages for numbers or names. Now search that code or name of the issue and try to fix the deployment issues.

About Botkube

Botkube is an AI-powered Kubernetes troubleshooting tool for DevOps, SREs, and developers. Botkube harnesses AI to automate troubleshooting, remediation, and administrative tasks— streamlining operations to save teams valuable time and accelerate development cycles. Botkube empowers both Kubernetes experts and non-experts to make complex tasks accessible to all skill levels.

Related topics:

.avif)

.avif)

.avif)

.avif)